I've started a YouTube channel for Algorithmic Art.

It will contain tutorials, worked examples and explorations.

Currently there is a playlist of tutorial videos to accompanying the creative coding for kids course:

Subscribing to the channel is the easiest way to be pinged when a new video goes up.

Let me know how you find the videos, I'm still learning how to make them, and if there are topics you'd like to see covered.

Saturday, 29 December 2018

Wednesday, 15 August 2018

Creative Coding - A Course for Kids

I am developing a creative coding course for young children.

This blog post will maintain the links to the course, and will grow as I cover more topics.

Check back periodically or follow @algorithmic_art for new projects and updates.

Update - a single website for this course is at https://sites.google.com/view/creative-coding-for-kids

Update - I've started a youtube channel which will include videos associated with this course. The playlist is at https://goo.gl/ZYwXwv

Many projects and courses give children something to type out and observe the results. In contrast, this course will gradually

The course will be based on p5js, a language designed to make creative coding easy for artists. It will use openprocessing.org which allows us to code and see the results entirely in the web - no need for complicated software installs and source code files. It also makes use of simple.js which we previously developed to reduce barriers to first time and younger coders.

Ideas are introduced and then used in later projects, so new coders should try to work through, or at least read through, all the projects.

They projects are designed to be small enough for children to try in a class or code club session. Parents and teachers are welcome to print out the PDFs.

However, you may find some of the completed code sketches useful:

recently started a CoderDojo in Cornwall for children aged 7-17,

This blog post will maintain the links to the course, and will grow as I cover more topics.

Check back periodically or follow @algorithmic_art for new projects and updates.

Update - a single website for this course is at https://sites.google.com/view/creative-coding-for-kids

Update - I've started a youtube channel which will include videos associated with this course. The playlist is at https://goo.gl/ZYwXwv

Creative Coding for Kids

The course is designed specifically for young children, especially those who may not have coded before. This means:- the projects are kept as small as possible

- the language used is kept as simple and friendly as possible

- good use is made of pictures

Many projects and courses give children something to type out and observe the results. In contrast, this course will gradually

- introduce and practice computer science concepts

- introduce and explain a programming language

The course will be based on p5js, a language designed to make creative coding easy for artists. It will use openprocessing.org which allows us to code and see the results entirely in the web - no need for complicated software installs and source code files. It also makes use of simple.js which we previously developed to reduce barriers to first time and younger coders.

Projects

The course is divided into a setup and three levels.- Level 0 - getting set up for the first time

- Level 1 - first steps for complete beginners

- Level 2 - gently introducing key ideas like functions and repetition.

- Level 3 - more interesting ideas for more confident coders

Ideas are introduced and then used in later projects, so new coders should try to work through, or at least read through, all the projects.

They projects are designed to be small enough for children to try in a class or code club session. Parents and teachers are welcome to print out the PDFs.

| Level 0 | |

|---|---|

| 00 - Getting Started (PDF) | Getting set up with openprocessing.org. Testing it works. |

| Level 1 | |

|---|---|

| 11 - First Shapes (PDF) | Creating simple shapes - circles and squares. Using shape fill colour. Getting started writing p5js code. |

| 12 - Coordinates & Size (PDF) | Learn to use coordinates to place shapes on the canvas. Setting shape size - circle diameter, square. |

| 13 - Random Numbers (PDF) | Replacing size numbers we've chosen with numbers chosen at random by our computer . Selecting colours from a list at random. Using randomness for shape location. |

| 14 - Simple Variables (PDF) | Challenge of moving a group of shapes, and how remembering numbers can help. Variables imagined as box containing a number. |

| Level 2 | |

|---|---|

| 21 - Simple Functions (PDF) | Idea of functions as reusable recipes of code. Demonstrating how functions can save lots of typing. Seeing how updating a function benefits all uses of it. |

| 22 - Repeating Things (PDF) | Introducing idea of computers as perfect for repetitive tasks. Demonstrating using a function 200 times. |

| 23 - More Functions (PDF) | Passing information (parameters) to functions. |

| 24 - Mixing Colour (PDF) | Introduce RGB colour mixing, and calculating colours. |

| 25 - More Loops (PDF) | Using loops counters to pass as parameters to functions. Use to calculate shape size/location, RGB colour. |

| 26 - Artistic Maths (PDF) | Introduce sine waves, after trying linear and squared functions. Use sine waves to create shape and colour patterns. |

| 27 - More Colour (PDF) | Introduce HSB as an alternative colour model. See how picking colours and calculating combinations is easier than with RGB. |

| 28 - Loops Inside Loops (PDF) | Introduce nested loops, show how they cover 2-dimensions. Practise creative uses of nested loop counters. |

| 29 - See-Through Colour (PDF) | Introduce colour translucency. See how translucency help make busy designs work better. |

| Level 3 | |

|---|---|

| 31 - No So Random Noise (PDF) | Introduce Perlin noise as more natural and less random than pure noise. Explore uses for creating textures and patterns from noise displacement. |

| 32 - Moving Around a Circle (PDF) | Learn how trigonometry can help us move around a circle. See how trigonometry can create interesting orbital patterns. |

| 33 - Patterns Inside Patterns (PDF) | Learn about self-similarity and recursion. See how recursion can easily create intricate patterns. Learn how to think about building recursive functions. |

| 34 - More Flexible Loops (PDF) | Learn about javascript's own for loops. See how they’re more flexible than the repeat instruction. |

| 35 - Classes and Objects (PDF) | Learn about classes and objects. Learn to use objects to model moving things like fireworks or ants. |

| 36 - Code That Creates Code (PDF) | Create our own turtle language, and write an interpreter for it. Evolve turtle code as an l-system to draw interesting patterns. |

Feedback

I'd welcome suggestions for improving the course. You can email me at makeyourownalgorithmicart at gmail dot com.

The first users of the course will be members of CoderDojo Cornwall.

Source Code

The course encourages children to type their own code and not copy large chunks from an existing listing. Where it is helpful to see working code, it will be printed in the course.However, you may find some of the completed code sketches useful:

recently started a CoderDojo in Cornwall for children aged 7-17,

Tuesday, 12 June 2018

Visualising The Riemann Zeta Function

This post, a little bit more mathematical than usual, describes my journey towards visualising a function called the Riemann Zeta Function, a function central to one of the world's most important unsolved mathematical challenges.

The motivation goes back to the mystery of prime numbers. Prime numbers are whole numbers which aren't the result of multiplying two other whole numbers. Here are some examples of numbers that are and aren't prime:

The idea of prime numbers is simple and many school children know what they are.

Despite that simplicity, the prime numbers have fiercely resisted a deeper understanding for centuries. They remain immune to even modern advanced mathematical tools. Simple questions like "what is the mathematical expression that gives us the nth prime number?" don't have an answer. Worse still, we can't prove that there is or isn't an answer to that question.

If we can't make progress on the question "where are the primes", the next best question is "how often do they occur?".

Let's think about how many prime numbers there are up to, and including, a number n. For example:

If we draw a picture of the number of primes up to a number n, we finally see what looks like a pattern:

We can see that for larger and larger n, the number of primes up to that n grows, which we expect because there are more primes under a bigger number than a smaller one.

What we also see is that the growth seems to slow down as n gets bigger. That makes intuitive sense, because the chances of a large number not being prime is greater than a smaller number, because there are more numbers underneath it that could be factors.

At the age of about 15, Gauss came up with a very good guess that the number of primes up to a number $n$, often written as $\pi(n)$, is

$$ \pi(n) \sim \frac{n}{ln(n)} $$

That's pretty amazing, not just because he was 15, but that he could do it without the aid of modern computers.

That expression $\frac{n}{ln(n)}$ is not exactly $\pi(n)$ but an approximation that gets better as $n$ gets larger and larger. This is basically the famous Prime Number Theorem.

Let's plot this expression $\frac{n}{ln(n)}$ and the actual values of $\pi(n)$. Here's a spreadsheet with prime numbers up to 100,000 [link].

That approximation is pretty good. But hang on - it looks like the two lines are diverging, not converging, as $n$ gets larger. Is the Prime Number Theorem wrong? No, the theorem is correct, but it doesn't say that the difference $\pi(n) - \frac{n}{ln(n)}$ gets smaller as $n$ gets larger. Instead it actually says that the ratio between the approximation and the actual values gets closer to 1 as $n$ gets larger and larger - a subtle difference:

$$ \lim \limits_{x \to \infty} \frac{ \pi(n) } { \frac{n}{ln(n)} } \to 1 $$

Euler, one of the greatest mathematicians of all time, was interested in infinite series, that is, series that go on forever.

Here's a simple example:

$$ \sum_{n=1}^{\infty} {n} = 1 + 2 + 3 + 4 + \cdots $$

As we keep adding these ever increasing numbers, the sum gets larger and larger and larger. Loosely speaking, the sum of this series is infinity.

What about this one?

$$ \sum_{n=1}^{\infty} {\frac{1}{2n}} = \frac{1}{1} + \frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \cdots $$

We can see that numbers in this series get smaller and smaller and smaller. Adding these numbers, we can see that the sum gets closer and closer to 2. We can imagine that if we did somehow add the whole infinite series, the sum would be 2. Some people don't like that leap, and prefer to say that in the limit, as $n$ goes to infinity, the sum goes to 2. That's a perfectly fine way to think about it.

Let's look at another one:

$$ \sum_{n=1}^{\infty} {\frac{1}{n}} = \frac{1}{1} + \frac{1}{2} + \frac{1}{3} + \frac{1}{4} + \cdots $$

Looking at this series, we can see the numbers get smaller and smaller. In fact, each number is half the previous number. Does that mean the sum of the infinite series is some finite number? It may surprise you that the answer is no. This series, called the harmonic series, does actually grow to infinity, but does so very slowly.

The lesson from that last harmonic series is that it isn't always obvious whether an infinite series converges to a finite value, or whether it diverges to infinity.

Anyway, Euler was interested in series of this form:

$$ \zeta(s) = \sum_{n=1}^{\infty} {\frac{1}{n^s}} = \frac{1}{1^s} + \frac{1}{2^s} + \frac{1}{3^s} + \frac{1}{4^s} + \cdots $$

We can see there's a new variable $s$ which could be any number, like $s=4$ or $s=100$. For $s=2$ this series is

$$

\begin{align}

\zeta(2) = \sum_{n=1}^{\infty} {\frac{1}{n^2}} & = \frac{1}{1^2} + \frac{1}{2^2} + \frac{1}{3^2} + \frac{1}{4^2} + \cdots \\

& = 1+ \frac{1}{4} + \frac{1}{9} + \frac{1}{16} + \cdots \\

\end{align}

$$

Different values of $s$ give different series. The general form $\zeta(s)$ is called a zeta function.

It's natural to ask whether the series created by different values of $s$ converge. Maybe they all do? Maybe none do? Maybe some of them do and some don't.

Let's see what happens when $s=1$. The series becomes $\zeta(1) = \frac{1}{1} + \frac{1}{2} + \frac{1}{3} + \frac{1}{4} + \cdots$ which is the harmonic series we saw earlier, and we know this doesn't converge.

What about $s=2$. The series becomes $\zeta(2) = \frac{1}{1} + \frac{1}{4} + \frac{1}{9} + \frac{1}{16} + \cdots$. It turns out that this series does converge, and actually converges to $\frac{\pi^2}{6}$.

Is there a way to see which values of $s$ create series that do converge? The usual simple ratio test to see if a series converges is inconclusive.

Let's get creative! Have a look at the following picture:

We can see that the area of the rectangles is always less than the area under the curve. If the rectangles have width 1 and height $\frac{1}{n^s}$, that means:

$$ \sum_{n=1}^{\infty}\frac{1}{n^s} < 1 + \int_{1}^{\infty} \frac{1}{t^s} \, dt $$

Doing that integral is easy - just as we learned at school:

$$

\begin{align}

\int_{1}^{\infty} \frac{1}{t^s} \, dt & = \lim_{a \to \infty} \int_{1}^{a} \frac{1}{t^s} \, dt \\

& = \lim_{a \to \infty} \left[\frac{1}{1-s}t^{1-s}\right]_1^{a} \\

& = \lim_{a \to \infty} \frac{1}{1-s}a^{1-s}-\frac{1}{1-s} \\

\end{align}

$$

Now, we can see that as $a$ gets larger and larger towards $\infty$, the only way that expression is going to converge is if that $a^{1-s}$ doesn't grow larger and larger. That means $a$ must be raised to a negative number. That is, $1-s < 0$, or $s > 1$.

So, to summarise, if $ \zeta(s) = \sum_{n=1}^{\infty}\frac{1}{n^s} $ is always less than $ 1 + \int_{1}^{\infty} \frac{1}{t^s} \, dt $, which only converges if $s > 1$ .. we have our condition that the zeta function only converges if $s > 1$. Phew!

You can read more about this technique here [link], and I received help here [link].

Now that we know the $\zeta(s)$ function only works when $s > 1$, let's plot it.

The code to do this is really simple. We simply create an evenly spaced list of numbers, from 1.1 to 9.9, spaced every 0.1. We then calculate $\zeta(s)$ for all these values of $s$, and plot a graph. We could have hand-coded our own function to work out $\zeta(s)$ by summing, say the first 10 or 100 terms in the series, but we can use the convenience function provided by the popular mpmath library.

A python notebook showing the code is here:

We can see that for large $s$ the value of $\zeta(s)$ gets smaller and smaller towards 1. That makes sense, because the fractions in the series get smaller and smaller. We can also see that as $s$ gets closer to 1, the value of $\zeta(s)$ gets bigger and bigger.

Overall, we have to admit the visualisation isn't that interesting.

We have to ask ourselves, could this work? Does the series converge, and if so, where does does it converge and where doesn't it converge?

Previously we used an integral test to find for which real values of $s$ the zeta function converged. We arrived at an expression:

$$ \lim_{a \to \infty} a^{1-s} $$

which only converges if $s > 1$. Does this still work when s is complex? Let's set $s = x + iy$,

$$

\begin{align}

a^{1-s} & = a^{1-x-iy} \\

& = a^{1-x}a^{-iy}

\end{align}

$$

That first bit $a^{1-x}$ converges when $x>1$ which is saying that the real part of $s$ must be more than 1. That's good, it's compatible with our previous conclusion. We'd be in trouble if it wasn't!

The second bit $a^{-iy}$ can be rewritten as $e^{-iy\ln{a}}$ which has a magnitude of 1, and so doesn't effect convergence. Remember that $e^{i\theta} = \cos(\theta) + i\sin(\theta)$ which are the coordinates of a unit circle on the complex plane. As $a$ grows larger and larger, $a^{-iy}$ just orbits around a circle of radius 1.

So, the zeta function $\zeta(s)$ works when s is complex, as long as the real part of $s$ is more than 1.

This should be more interesting to visualise as the complex plane is two dimensional. The following shows a plot of the complex plane with real range 1.01 to 6.01 and imaginary range -2.5i to 2.5i. The colours represent the absolute value of $\zeta(s)$.

Of course, if we only look at the absolute value of the complex values of the feta function, we're losing information on the real and imaginary parts. So let's rerun the plot but this time base the colours on the real part of $\zeta(s)$.

And again, but with the imaginary part.

The code for creating this is plot is in a python notebook:

Looking at these plots, we can see there's a lot going on around the the point (1+0i). The plot of absolute values shows that $|\zeta(s)|$ grows large at this point. We can imagine a kind of stalagmite at this point! Interestingly the plot of the real part of the zeta function shows the action shifted right a little, and the plot of the imaginary part shows action between the point (1+0i) continuing to the right. worth coming back to this to explore it further.

Is that the only point of interest? Let's zoom out to a wider viewpoint with real part ranging from 1.01 to 31.01 and imaginary part -15i to +15i. The following shows all three absolute, real and imaginary part plots.

This wider view shows us that there is interesting stuff happening at points other than (1+0i). It looks like there might be something happening to the left of (1+14i) and (1-14i) ... but how can this be? Surely, the zeta function only exists on the complex plane where the real part if greater than 1. Hmmmm....

Let's make a contour plot to give us a different insight into the function. The lines of a contour plot try to follow similar values. The Python matplotlib has a convenient contours plot function.

Click on the following to take a closer look.

The viewport is larger with real part going from 1.01 to 41.01, and the imaginary part from -20i to +20i. The contour plots not only reveal more curious action along the left hand edge, they also give a clearer view of how the zeta function behaves near that edge.

The code for creating these contour plots is in the following python notebook:

It's instructive to combine the three kinds of plot (absolute value, real and imaginary parts) so we can see them together:

Let's now return to that question we asked earlier. Those contour lines seem to point to something that's happening off the left-hand edge, $\Re(s) = 1$. It's as if the pattern was brutally cut off before we could see it in its entirety.

Let's imagine what would happen if we continued those lines.

This is really interesting. The maths tells us that the contour lines must stop at $\Re(s) = 1$ because the zeta function doesn't converge for $s \le 1$. We can't argue with the maths. And yet, the picture suggests very strongly that the pattern does continue.

Curiouser and curiouser!

We already know that this series only converges if each successive term doesn't increase, that is $x < 1$. What if we used a complex number $z$ instead of a plain normal number $x$?

$$ f(z) = 1 + z + z^2 + z^3 + z^4 + z^5 + ... $$

How do we decide for which $z$ this converges? If we write $z$ in the polar coordinate form $z = r \cdot e^{i\theta}$, we can see that

$$ f(z) = 1 + r \cdot e^{i\theta} + r^2 \cdot e^{i2\theta} + r ^3 \cdot e^{i3\theta} + ... $$

The ratio test gives us

$$

\begin{align}

\lim_{n \to \infty} \vert \frac { r ^{n+1} \cdot e^{i(n+1)\theta}} { r^n \cdot e^{in\theta}} \vert & < 1 \\

\\

\lim_{n \to \infty} \vert r \cdot e^{i\theta} \vert & < 1 \\

\\

\vert r \vert & < 1 \\

\end{align}

$$

So the series converges as long as the complex number $z$ is within the unit circle, as shown in the next picture.

Now, let's take a different perspective on that series.

$$

\begin{align}

f(z) & = 1 + z + z^2 + z^3 + z^4 + z^5 + ... \\

z \cdot (f(z) & = z + z^2 + z^3 + z^4 + z^5 + z^6 + ... \\

1 + z \cdot (f(z) & = 1 + z + z^2 + z^3 + z^4 + z^5 + ... \\

& = f(z) \\

1 &= (1-z) \cdot f(z) \\

f(z) &= \frac{1}{1 - z} \\

\end{align}

$$

So we have a simple expression for the sum of that series. But this expression doesn't itself suggest that $\vert z \vert < 1$. It only suggests that $z \ne 1$.

What's going on ?!

One way to think about this is that $f(z) = \frac{1}{1 - z}$ is function which works over the entire complex plane, except at $z = 1$, and that the series $f(z) = 1 + z + z^2 + z^3 + z^4 + z^5 + ... $ is just a representation which works only for $\vert z \vert < 1$. In fact, it is just one of many representations. Another, slightly more complicated, representation is $f(z) = \int_0^{\infty} {e^{-t(1-z}}\,dt$ which works over $\Re(z) < 1$, which also covers the left-half plane.

What analytic continuation does is to take a representation which has a limited domain over which it works, and tries to find a different representation which works over a different domain. If we're lucky we might find the function that works everywhere, or at least the maximum possible.

There are several methods to do this analytic continuation, and they all depend on the fact that if two representations overlap, and have the same values there, then they are compatible in quite a strong sense. Actually doing the continuation is fairly heavy with algebra so we won't do it here. What we can do is get an intuitive feel for how and why analytic continuation works.

Have a look at the following, which shows the same zone of convergence of the series representation as before.

This time we're noting that the series is in fact the Taylor expansion of $f(z) = \frac{1}{1 - z}$ about $z=0$ and the radius of converge is the distance from $z=0$ to the nearest pole, which is at $z=1$ in this example. A pole is just a point where the function isn't defined and in the limit approaches infinity. Think of pole holding up a tent cloth!

Now, what happens if we take a Taylor series expansion of the same $f(z) = \frac{1}{1 - z}$ but about a different point, $z = 1 + 0.5i$, or example.

The resultant series, which is fairly easy to work out, has a radius of convergence centred about $z = 1 + 0.5i$ and extends to the pole. In fact that circle is smaller than our previous one because the pole is closer. But notice that this new domain covers a part of the complex plane that the previous circle didn't.

We've extended the domain! And we can keep doing it ..

We can keep doing this until we cover the entire complex plane, but excluding that pole of course. This technique is especially useful if we didn't know the true function, but only series representations which have limited domains.

Can this be done with the Riemann Zeta function, which in the series form, only works for $\Re(z) > 1$. Those contour plots strongly suggested it could be. The answer is yes! In fact it can be extended to the entire complex plane, and that continuation has only one pole at $z=1$ and many zeros. We won't do it here because the algebra is heavy.

What we can do is use software like the Python mpmath library to visualise the rest of the Riemann Zeta function.

We can see the values do continue naturally from that hard boundary we have before at $\re(z) = 1$. We can also see that the values are a lot more varying in the left half of the complex plane. To the right the function seems to be very calm, boring even.

Interestingly, we can see what look like zeros of the zeta function going up the line $\Re(z) = \frac{1}{2}$. That's worth a closer look.

Let's see what a contour plots can show us.

Those zeros have become really clear. There are three things to note:

For completeness, the following plots compare the real and imaginary contours. The values, which cover a very broad range, have been subjected to a natural log just to aid visualisation.

The code for these plots are online:

The following is a higher resolution plot combining the colour plot and the contours for absolute and real parts.

But, mathematicians have not yet proved that the other, so-called non-trivial zeros, are all on the vertical line $\Re(z) = \frac{1}{2}$.

If you can do that, or disprove it, you'll be awarded 1 million dollars.

In 1903, 15 non-trivial zeros of the Riemann Zeta function were found. By 2004, $10^{13}$ zeros were found, all on the line $\Re(z) = \frac{1}{2}$. That looks like extremely convincing evidence - but it is still not proof.

This challenge has been open since Riemann's 1859 paper, and was part of Hilbert's 1900 collection of unsolved important problems, as well as the Clay Institute's millennial problems set out in the year 2000.

Why? Prime Numbers.

Of all the things we can visualise, why this function?The motivation goes back to the mystery of prime numbers. Prime numbers are whole numbers which aren't the result of multiplying two other whole numbers. Here are some examples of numbers that are and aren't prime:

- 10 isn't a prime number because 10 is 5 times 2.

- 7 is a prime number, because we can't find two whole numbers that multiply together to give 7.

- 12 is not a prime number. There are several ways to make 12. For example, $12 = 4 \times 3$, and $12 = 6 \times 2$, and even $12 = 2 \times 2 \times 3$.

- The first few prime numbers are 2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, ...

The idea of prime numbers is simple and many school children know what they are.

Despite that simplicity, the prime numbers have fiercely resisted a deeper understanding for centuries. They remain immune to even modern advanced mathematical tools. Simple questions like "what is the mathematical expression that gives us the nth prime number?" don't have an answer. Worse still, we can't prove that there is or isn't an answer to that question.

If we can't make progress on the question "where are the primes", the next best question is "how often do they occur?".

Let's think about how many prime numbers there are up to, and including, a number n. For example:

- the number of primes up to 5 is 3, namely 2, 3 and 5.

- the number of primes up to 10 is 4, namely 2, 3, 5 and 7.

- the number of primes up to 20 is 8, namely 2, 3, 5, 7, 11, 13, 17, and 19.

- the number of primes up to 100 is 25. We won't list them all here, but you find a list here [link].

If we draw a picture of the number of primes up to a number n, we finally see what looks like a pattern:

We can see that for larger and larger n, the number of primes up to that n grows, which we expect because there are more primes under a bigger number than a smaller one.

What we also see is that the growth seems to slow down as n gets bigger. That makes intuitive sense, because the chances of a large number not being prime is greater than a smaller number, because there are more numbers underneath it that could be factors.

At the age of about 15, Gauss came up with a very good guess that the number of primes up to a number $n$, often written as $\pi(n)$, is

$$ \pi(n) \sim \frac{n}{ln(n)} $$

That's pretty amazing, not just because he was 15, but that he could do it without the aid of modern computers.

That expression $\frac{n}{ln(n)}$ is not exactly $\pi(n)$ but an approximation that gets better as $n$ gets larger and larger. This is basically the famous Prime Number Theorem.

Let's plot this expression $\frac{n}{ln(n)}$ and the actual values of $\pi(n)$. Here's a spreadsheet with prime numbers up to 100,000 [link].

That approximation is pretty good. But hang on - it looks like the two lines are diverging, not converging, as $n$ gets larger. Is the Prime Number Theorem wrong? No, the theorem is correct, but it doesn't say that the difference $\pi(n) - \frac{n}{ln(n)}$ gets smaller as $n$ gets larger. Instead it actually says that the ratio between the approximation and the actual values gets closer to 1 as $n$ gets larger and larger - a subtle difference:

$$ \lim \limits_{x \to \infty} \frac{ \pi(n) } { \frac{n}{ln(n)} } \to 1 $$

There are better approximations than $\frac{n}{ln(n)}$, but they're not as simple, and we won't get into them now.

Around 1848-50, Pafnuty Chebyshev tried to prove the Prime Number Theorem. He made great progress, and proved a slightly weaker version. The theorem was finally proved in 1896, independently by both Jacques Hadamard and Charles Jean de la Vallée Poussin.

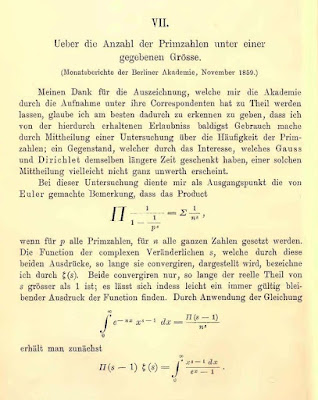

All of these great mathematicians used ideas from a chap called Bernhard Riemann who wrote a short but historic paper titled, "On the Number of Primes Less Than A Given Magnitude".

In just 10 pages he set out new ideas that have inspired generations of mathematicians even to this day. It's hard to overestimate the historic importance of his paper.

One of the new ideas Riemann introduced was the connection between the prime counting function $\pi(x)$ that we've been talking about, and a function we now call the Riemann Zeta function $\zeta(s)$. He discovered that the distribution of primes is related to the zeros of this zeta function. In fact he suggested that the zeros only occur when complex $s$ has real part $\frac{1}{2}$ and no-where else (except for trivial zeros at negative even numbers, -2, -4. -6, etc).

In concise maths, Riemann suggested:

$$ \zeta(s) = 0 \iff s = \frac{1}{2} + it$$

Sadly, he didn't prove it in his paper. And nobody has been able to prove that innocent looking statement since.

In fact this problem, the Riemann Hypothesis, is considered one of the most important mathematical unsolved problems today. It is one of the Clay Institute millennium challenges and a successful proof will attract a $1 million dollar prize.

We've talked about this important Riemann Zeta function but we haven't explained what it actually is. We'll do that next in a little more detail.

Around 1848-50, Pafnuty Chebyshev tried to prove the Prime Number Theorem. He made great progress, and proved a slightly weaker version. The theorem was finally proved in 1896, independently by both Jacques Hadamard and Charles Jean de la Vallée Poussin.

All of these great mathematicians used ideas from a chap called Bernhard Riemann who wrote a short but historic paper titled, "On the Number of Primes Less Than A Given Magnitude".

In just 10 pages he set out new ideas that have inspired generations of mathematicians even to this day. It's hard to overestimate the historic importance of his paper.

One of the new ideas Riemann introduced was the connection between the prime counting function $\pi(x)$ that we've been talking about, and a function we now call the Riemann Zeta function $\zeta(s)$. He discovered that the distribution of primes is related to the zeros of this zeta function. In fact he suggested that the zeros only occur when complex $s$ has real part $\frac{1}{2}$ and no-where else (except for trivial zeros at negative even numbers, -2, -4. -6, etc).

In concise maths, Riemann suggested:

$$ \zeta(s) = 0 \iff s = \frac{1}{2} + it$$

Sadly, he didn't prove it in his paper. And nobody has been able to prove that innocent looking statement since.

In fact this problem, the Riemann Hypothesis, is considered one of the most important mathematical unsolved problems today. It is one of the Clay Institute millennium challenges and a successful proof will attract a $1 million dollar prize.

We've talked about this important Riemann Zeta function but we haven't explained what it actually is. We'll do that next in a little more detail.

Riemann's Zeta Function

Before we dive into the Riemann Zeta function let's go back to basics and see how it came about.

Euler, one of the greatest mathematicians of all time, was interested in infinite series, that is, series that go on forever.

Here's a simple example:

$$ \sum_{n=1}^{\infty} {n} = 1 + 2 + 3 + 4 + \cdots $$

As we keep adding these ever increasing numbers, the sum gets larger and larger and larger. Loosely speaking, the sum of this series is infinity.

What about this one?

$$ \sum_{n=1}^{\infty} {\frac{1}{2n}} = \frac{1}{1} + \frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \cdots $$

We can see that numbers in this series get smaller and smaller and smaller. Adding these numbers, we can see that the sum gets closer and closer to 2. We can imagine that if we did somehow add the whole infinite series, the sum would be 2. Some people don't like that leap, and prefer to say that in the limit, as $n$ goes to infinity, the sum goes to 2. That's a perfectly fine way to think about it.

Let's look at another one:

$$ \sum_{n=1}^{\infty} {\frac{1}{n}} = \frac{1}{1} + \frac{1}{2} + \frac{1}{3} + \frac{1}{4} + \cdots $$

Looking at this series, we can see the numbers get smaller and smaller. In fact, each number is half the previous number. Does that mean the sum of the infinite series is some finite number? It may surprise you that the answer is no. This series, called the harmonic series, does actually grow to infinity, but does so very slowly.

The lesson from that last harmonic series is that it isn't always obvious whether an infinite series converges to a finite value, or whether it diverges to infinity.

Anyway, Euler was interested in series of this form:

$$ \zeta(s) = \sum_{n=1}^{\infty} {\frac{1}{n^s}} = \frac{1}{1^s} + \frac{1}{2^s} + \frac{1}{3^s} + \frac{1}{4^s} + \cdots $$

We can see there's a new variable $s$ which could be any number, like $s=4$ or $s=100$. For $s=2$ this series is

$$

\begin{align}

\zeta(2) = \sum_{n=1}^{\infty} {\frac{1}{n^2}} & = \frac{1}{1^2} + \frac{1}{2^2} + \frac{1}{3^2} + \frac{1}{4^2} + \cdots \\

& = 1+ \frac{1}{4} + \frac{1}{9} + \frac{1}{16} + \cdots \\

\end{align}

$$

Different values of $s$ give different series. The general form $\zeta(s)$ is called a zeta function.

It's natural to ask whether the series created by different values of $s$ converge. Maybe they all do? Maybe none do? Maybe some of them do and some don't.

Let's see what happens when $s=1$. The series becomes $\zeta(1) = \frac{1}{1} + \frac{1}{2} + \frac{1}{3} + \frac{1}{4} + \cdots$ which is the harmonic series we saw earlier, and we know this doesn't converge.

What about $s=2$. The series becomes $\zeta(2) = \frac{1}{1} + \frac{1}{4} + \frac{1}{9} + \frac{1}{16} + \cdots$. It turns out that this series does converge, and actually converges to $\frac{\pi^2}{6}$.

Is there a way to see which values of $s$ create series that do converge? The usual simple ratio test to see if a series converges is inconclusive.

Let's get creative! Have a look at the following picture:

We can see that the area of the rectangles is always less than the area under the curve. If the rectangles have width 1 and height $\frac{1}{n^s}$, that means:

$$ \sum_{n=1}^{\infty}\frac{1}{n^s} < 1 + \int_{1}^{\infty} \frac{1}{t^s} \, dt $$

Doing that integral is easy - just as we learned at school:

$$

\begin{align}

\int_{1}^{\infty} \frac{1}{t^s} \, dt & = \lim_{a \to \infty} \int_{1}^{a} \frac{1}{t^s} \, dt \\

& = \lim_{a \to \infty} \left[\frac{1}{1-s}t^{1-s}\right]_1^{a} \\

& = \lim_{a \to \infty} \frac{1}{1-s}a^{1-s}-\frac{1}{1-s} \\

\end{align}

$$

Now, we can see that as $a$ gets larger and larger towards $\infty$, the only way that expression is going to converge is if that $a^{1-s}$ doesn't grow larger and larger. That means $a$ must be raised to a negative number. That is, $1-s < 0$, or $s > 1$.

So, to summarise, if $ \zeta(s) = \sum_{n=1}^{\infty}\frac{1}{n^s} $ is always less than $ 1 + \int_{1}^{\infty} \frac{1}{t^s} \, dt $, which only converges if $s > 1$ .. we have our condition that the zeta function only converges if $s > 1$. Phew!

You can read more about this technique here [link], and I received help here [link].

Now that we know the $\zeta(s)$ function only works when $s > 1$, let's plot it.

The code to do this is really simple. We simply create an evenly spaced list of numbers, from 1.1 to 9.9, spaced every 0.1. We then calculate $\zeta(s)$ for all these values of $s$, and plot a graph. We could have hand-coded our own function to work out $\zeta(s)$ by summing, say the first 10 or 100 terms in the series, but we can use the convenience function provided by the popular mpmath library.

A python notebook showing the code is here:

We can see that for large $s$ the value of $\zeta(s)$ gets smaller and smaller towards 1. That makes sense, because the fractions in the series get smaller and smaller. We can also see that as $s$ gets closer to 1, the value of $\zeta(s)$ gets bigger and bigger.

Overall, we have to admit the visualisation isn't that interesting.

Into the Complex Plane

Riemann took Euler's zeta function and explored what it looked like when the parameter $s$ is not just a whole number, or even a real number, but a complex number.We have to ask ourselves, could this work? Does the series converge, and if so, where does does it converge and where doesn't it converge?

Previously we used an integral test to find for which real values of $s$ the zeta function converged. We arrived at an expression:

$$ \lim_{a \to \infty} a^{1-s} $$

which only converges if $s > 1$. Does this still work when s is complex? Let's set $s = x + iy$,

$$

\begin{align}

a^{1-s} & = a^{1-x-iy} \\

& = a^{1-x}a^{-iy}

\end{align}

$$

That first bit $a^{1-x}$ converges when $x>1$ which is saying that the real part of $s$ must be more than 1. That's good, it's compatible with our previous conclusion. We'd be in trouble if it wasn't!

The second bit $a^{-iy}$ can be rewritten as $e^{-iy\ln{a}}$ which has a magnitude of 1, and so doesn't effect convergence. Remember that $e^{i\theta} = \cos(\theta) + i\sin(\theta)$ which are the coordinates of a unit circle on the complex plane. As $a$ grows larger and larger, $a^{-iy}$ just orbits around a circle of radius 1.

So, the zeta function $\zeta(s)$ works when s is complex, as long as the real part of $s$ is more than 1.

This should be more interesting to visualise as the complex plane is two dimensional. The following shows a plot of the complex plane with real range 1.01 to 6.01 and imaginary range -2.5i to 2.5i. The colours represent the absolute value of $\zeta(s)$.

Of course, if we only look at the absolute value of the complex values of the feta function, we're losing information on the real and imaginary parts. So let's rerun the plot but this time base the colours on the real part of $\zeta(s)$.

And again, but with the imaginary part.

The code for creating this is plot is in a python notebook:

Looking at these plots, we can see there's a lot going on around the the point (1+0i). The plot of absolute values shows that $|\zeta(s)|$ grows large at this point. We can imagine a kind of stalagmite at this point! Interestingly the plot of the real part of the zeta function shows the action shifted right a little, and the plot of the imaginary part shows action between the point (1+0i) continuing to the right. worth coming back to this to explore it further.

Is that the only point of interest? Let's zoom out to a wider viewpoint with real part ranging from 1.01 to 31.01 and imaginary part -15i to +15i. The following shows all three absolute, real and imaginary part plots.

This wider view shows us that there is interesting stuff happening at points other than (1+0i). It looks like there might be something happening to the left of (1+14i) and (1-14i) ... but how can this be? Surely, the zeta function only exists on the complex plane where the real part if greater than 1. Hmmmm....

Let's make a contour plot to give us a different insight into the function. The lines of a contour plot try to follow similar values. The Python matplotlib has a convenient contours plot function.

Click on the following to take a closer look.

The viewport is larger with real part going from 1.01 to 41.01, and the imaginary part from -20i to +20i. The contour plots not only reveal more curious action along the left hand edge, they also give a clearer view of how the zeta function behaves near that edge.

The code for creating these contour plots is in the following python notebook:

It's instructive to combine the three kinds of plot (absolute value, real and imaginary parts) so we can see them together:

Let's now return to that question we asked earlier. Those contour lines seem to point to something that's happening off the left-hand edge, $\Re(s) = 1$. It's as if the pattern was brutally cut off before we could see it in its entirety.

Let's imagine what would happen if we continued those lines.

This is really interesting. The maths tells us that the contour lines must stop at $\Re(s) = 1$ because the zeta function doesn't converge for $s \le 1$. We can't argue with the maths. And yet, the picture suggests very strongly that the pattern does continue.

Curiouser and curiouser!

Analytic Continuation

One of several strokes of genius that Riemann had was to resolve that puzzle above.

Have a look at the following infinite series:

$$ f(x) = 1 + x + x^2 + x^3 + x^4 + x^5 + ... $$

Have a look at the following infinite series:

$$ f(x) = 1 + x + x^2 + x^3 + x^4 + x^5 + ... $$

We already know that this series only converges if each successive term doesn't increase, that is $x < 1$. What if we used a complex number $z$ instead of a plain normal number $x$?

$$ f(z) = 1 + z + z^2 + z^3 + z^4 + z^5 + ... $$

How do we decide for which $z$ this converges? If we write $z$ in the polar coordinate form $z = r \cdot e^{i\theta}$, we can see that

$$ f(z) = 1 + r \cdot e^{i\theta} + r^2 \cdot e^{i2\theta} + r ^3 \cdot e^{i3\theta} + ... $$

The ratio test gives us

$$

\begin{align}

\lim_{n \to \infty} \vert \frac { r ^{n+1} \cdot e^{i(n+1)\theta}} { r^n \cdot e^{in\theta}} \vert & < 1 \\

\\

\lim_{n \to \infty} \vert r \cdot e^{i\theta} \vert & < 1 \\

\\

\vert r \vert & < 1 \\

\end{align}

$$

So the series converges as long as the complex number $z$ is within the unit circle, as shown in the next picture.

Now, let's take a different perspective on that series.

$$

\begin{align}

f(z) & = 1 + z + z^2 + z^3 + z^4 + z^5 + ... \\

z \cdot (f(z) & = z + z^2 + z^3 + z^4 + z^5 + z^6 + ... \\

1 + z \cdot (f(z) & = 1 + z + z^2 + z^3 + z^4 + z^5 + ... \\

& = f(z) \\

1 &= (1-z) \cdot f(z) \\

f(z) &= \frac{1}{1 - z} \\

\end{align}

$$

So we have a simple expression for the sum of that series. But this expression doesn't itself suggest that $\vert z \vert < 1$. It only suggests that $z \ne 1$.

What's going on ?!

One way to think about this is that $f(z) = \frac{1}{1 - z}$ is function which works over the entire complex plane, except at $z = 1$, and that the series $f(z) = 1 + z + z^2 + z^3 + z^4 + z^5 + ... $ is just a representation which works only for $\vert z \vert < 1$. In fact, it is just one of many representations. Another, slightly more complicated, representation is $f(z) = \int_0^{\infty} {e^{-t(1-z}}\,dt$ which works over $\Re(z) < 1$, which also covers the left-half plane.

What analytic continuation does is to take a representation which has a limited domain over which it works, and tries to find a different representation which works over a different domain. If we're lucky we might find the function that works everywhere, or at least the maximum possible.

There are several methods to do this analytic continuation, and they all depend on the fact that if two representations overlap, and have the same values there, then they are compatible in quite a strong sense. Actually doing the continuation is fairly heavy with algebra so we won't do it here. What we can do is get an intuitive feel for how and why analytic continuation works.

Have a look at the following, which shows the same zone of convergence of the series representation as before.

This time we're noting that the series is in fact the Taylor expansion of $f(z) = \frac{1}{1 - z}$ about $z=0$ and the radius of converge is the distance from $z=0$ to the nearest pole, which is at $z=1$ in this example. A pole is just a point where the function isn't defined and in the limit approaches infinity. Think of pole holding up a tent cloth!

Now, what happens if we take a Taylor series expansion of the same $f(z) = \frac{1}{1 - z}$ but about a different point, $z = 1 + 0.5i$, or example.

The resultant series, which is fairly easy to work out, has a radius of convergence centred about $z = 1 + 0.5i$ and extends to the pole. In fact that circle is smaller than our previous one because the pole is closer. But notice that this new domain covers a part of the complex plane that the previous circle didn't.

We've extended the domain! And we can keep doing it ..

We can keep doing this until we cover the entire complex plane, but excluding that pole of course. This technique is especially useful if we didn't know the true function, but only series representations which have limited domains.

Can this be done with the Riemann Zeta function, which in the series form, only works for $\Re(z) > 1$. Those contour plots strongly suggested it could be. The answer is yes! In fact it can be extended to the entire complex plane, and that continuation has only one pole at $z=1$ and many zeros. We won't do it here because the algebra is heavy.

What we can do is use software like the Python mpmath library to visualise the rest of the Riemann Zeta function.

We can see the values do continue naturally from that hard boundary we have before at $\re(z) = 1$. We can also see that the values are a lot more varying in the left half of the complex plane. To the right the function seems to be very calm, boring even.

Interestingly, we can see what look like zeros of the zeta function going up the line $\Re(z) = \frac{1}{2}$. That's worth a closer look.

Let's see what a contour plots can show us.

Those zeros have become really clear. There are three things to note:

- There are zeros up the line $\Re(z) = \frac{1}{2}$

- There are zeros at every negative even number on the real line, -2, -4, -6, -8, ...

- There is one pole at 1.

For completeness, the following plots compare the real and imaginary contours. The values, which cover a very broad range, have been subjected to a natural log just to aid visualisation.

The code for these plots are online:

- colour plots: https://drive.google.com/open?id=13CLcA8at9JoHQJw7lo0sPNucCcAabtJ2

- contour plots: https://drive.google.com/open?id=18jgeJg_8fa1ka4AxoC5Ek-AKdz7ZxW0i

The following is a higher resolution plot combining the colour plot and the contours for absolute and real parts.

Zeta Zeros - $1 Million Prize!

Mathematicians have proved:- there is only one pole in the version of the Riemann Zeta function extended to the entire complex plane

- there are zeros at $-2n$, the negative odd real numbers

But, mathematicians have not yet proved that the other, so-called non-trivial zeros, are all on the vertical line $\Re(z) = \frac{1}{2}$.

If you can do that, or disprove it, you'll be awarded 1 million dollars.

In 1903, 15 non-trivial zeros of the Riemann Zeta function were found. By 2004, $10^{13}$ zeros were found, all on the line $\Re(z) = \frac{1}{2}$. That looks like extremely convincing evidence - but it is still not proof.

This challenge has been open since Riemann's 1859 paper, and was part of Hilbert's 1900 collection of unsolved important problems, as well as the Clay Institute's millennial problems set out in the year 2000.

More Reading

- Nice easy-going video on visualising the Riemann Zeta function https://www.youtube.com/watch?v=sD0NjbwqlYw

- Barry Mazur "A Lecture on Primes and the Riemann Hypothesis" (video) https://www.youtube.com/watch?v=way0jAWpjZA

- One of the best explanations of the Riemann Hypothesis: https://medium.com/@JorgenVeisdal/the-riemann-hypothesis-explained-fa01c1f75d3f

- The official Clay Institute millennium challenge: http://www.claymath.org/millennium-problems/riemann-hypothesis

- English translation of Riemann's "One The Number of Primes Less Than A Given Magnitude": http://www.claymath.org/sites/default/files/ezeta.pdf

- A good overview of the Prime Number Theorem and the Riemann Hypothesis: https://thatsmaths.com/2014/02/27/the-prime-number-theorem/

- A very readable introduction to analytic continuation: http://www.mathpages.com/home/kmath649/kmath649.htm

- Another intro to analytic continuation showing other methods: https://www.colorado.edu/amath/sites/default/files/attached-files/pade_analytic_continuation.pdf

- Download the zeros of the Riemann Zeta function: http://www.dtc.umn.edu/~odlyzko/zeta_tables/

Wednesday, 23 May 2018

Artificial Life And Conway's Game of Life - Part 2/2

In the last post we look at how very simple rules for modelling how artificial life evolves can create some really interesting patterns and behaviours.

This time we'll take the idea and take it in a different direction - we'll turn the evolving landscape into a texture that maps onto the surface of a 3-d object.

To do that p5js uses webgl, a technology designed for efficiently rendering 3-d objects using a GPU in your computer if there is one. Lots of computer games use the same approach, taking advantage of the specially designed chips inside your computer or laptop.

In the past, using your GPU to accelerate graphics was really complex and hard - but today it has been made much easier. P5js also makes it easy.

Look at the following really simple and short code:

function setup() {

createCanvas(800, 600, WEBGL);

background('white');

}

function draw() {

box(200, 200, 200);

}

In the setup() function the first thing we notice is that the canvas is created with an extra WEBGL parameter - that tells p5js to create a special canvas the can render webgl objects. We then create a white background - easy.

In the draw function we have only one command and the name box() gives us a clue as to what it does - it creates a 3-dimensional box. Our box(200, 200, 200) creates a box with width, height and depth 200, a cube in other words.

Let's see the results:

That doesn't look like a 3-d cube, it looks like a flat square. The reason is that we're looking at the cube straight on. To see more than one face we need to rotate the cube. Let's do that:

function draw() {

rotateX(1.0);

box(200, 200, 200);

}

All we've done is add a command to rotate the box by 1 radian (about 57 degrees). Actually that's not quite right. That rotateX() command rotates the entire world around the x-axis. That means we should see the cube roll forwards or backwards.

Let's see the results:

That's much more like a 3-dimensional object. Let's see what happens if we combine a rotate about the x-axis as well as a rotation about the y-axis.

function draw() {

rotateX(1.0);

rotateY(1.0);

box(200, 200, 200);

}

And the result:

Nice! Now let's animate the cube by drawing frames with the cube at different rotations. We looked at animation previously.

Have a look at the code which has only grown a small bit:

// counter used to determine how much we rotate the 3-d world

var counter = 0;

function setup() {

createCanvas(800, 600, WEBGL);

}

function draw() {

// blank canvas for animation

background('white');

// rotate world

rotateX(counter);

rotateY(counter);

// draw box

box(200, 200, 200);

// increase counter

counter += 0.01;

}

We're blanking the canvas before we draw a frame. We're using the same rotations about the x and y axes as before but this time the angle is inside the variable counter, which we set to zero at the start of the code. We draw the box, and finally we increase counter by a small amount. That means the next frame will have the box drawn at a slightly different angle.

Let's see if this works:

That's looking good.

With very simple code we've rendered an animated 3-d object using p5js, and even taken advantage of the special hardware inside our computers to help accelerate the rendering.

The code for this cube is online at:

Let's do this with a simpler set of numbers first. Look at the following code, most of which should be familiar.

var img;

var counter = 0;

function setup() {

// create a WEBGL canvas

createCanvas(800, 600, WEBGL);

// create empty image and fill pixel by pixel

img = createImage(400, 100);

img.loadPixels();

for(var x = 0; x < img.width; x++) {

for(var y = 0; y < img.height; y++) {

// use noise() values 0-255 to create greyscale pixels

img.set(x, y, noise(x/40,y/10)*255);

}

}

img.updatePixels();

}

function draw() {

background('white');

// animate a 3-d box

push();

rotateX(counter);

rotateY(counter);

texture(img);

box(200, 200, 200);

pop();

// increase counter which determines rotation of box

counter += 0.01;

}

The second half of the code is the same as before - we rotate the world and draw a cube, and increase the counter which determines the rotation of the world at the next frame. There is a new instruction texture(img) which we can correctly guess as applying a texture to the surface of the 3-d object. We can even guess that img is the image that's applied as the texture.

The new code near the top creates that image img. Let's look at it more closely. We first create an empty image of size 400 by 100 using createImage(400, 100). To access the actual pixels, we need to use loadPixels() to expose them. We then use a loop with in a loop to visit every pixel and set it's value, which we do using the noise() function, which creates a less random randomness. Finally we need to use updatePixels() to apply those changes and commit them to the image.

That looks very hopeful.

The code for this textured cube is at:

While we're at it, let's change the shape from a box() to a torus().

To make sure the texture changes as the Game of Life patterns change, we need to update the texture image every frame.

The code is a little longer so we'll just point to it. It doesn't do anything more than what we've already discussed.

And the results ...

Very cool!

This time we'll take the idea and take it in a different direction - we'll turn the evolving landscape into a texture that maps onto the surface of a 3-d object.

3-D Objects in P5JS

Normally we use p5js to create 2-d shapes like lines, rectangles and circles, painted onto a canvas. We can also use p5js to create 3-d objects and move them in 3-d space.To do that p5js uses webgl, a technology designed for efficiently rendering 3-d objects using a GPU in your computer if there is one. Lots of computer games use the same approach, taking advantage of the specially designed chips inside your computer or laptop.

In the past, using your GPU to accelerate graphics was really complex and hard - but today it has been made much easier. P5js also makes it easy.

Look at the following really simple and short code:

function setup() {

createCanvas(800, 600, WEBGL);

background('white');

}

function draw() {

box(200, 200, 200);

}

In the setup() function the first thing we notice is that the canvas is created with an extra WEBGL parameter - that tells p5js to create a special canvas the can render webgl objects. We then create a white background - easy.

In the draw function we have only one command and the name box() gives us a clue as to what it does - it creates a 3-dimensional box. Our box(200, 200, 200) creates a box with width, height and depth 200, a cube in other words.

Let's see the results:

That doesn't look like a 3-d cube, it looks like a flat square. The reason is that we're looking at the cube straight on. To see more than one face we need to rotate the cube. Let's do that:

function draw() {

rotateX(1.0);

box(200, 200, 200);

}

Let's see the results:

That's much more like a 3-dimensional object. Let's see what happens if we combine a rotate about the x-axis as well as a rotation about the y-axis.

function draw() {

rotateX(1.0);

rotateY(1.0);

box(200, 200, 200);

}

And the result:

Nice! Now let's animate the cube by drawing frames with the cube at different rotations. We looked at animation previously.

Have a look at the code which has only grown a small bit:

// counter used to determine how much we rotate the 3-d world

var counter = 0;

function setup() {

createCanvas(800, 600, WEBGL);

}

function draw() {

// blank canvas for animation

background('white');

// rotate world

rotateX(counter);

rotateY(counter);

// draw box

box(200, 200, 200);

// increase counter

counter += 0.01;

}

We're blanking the canvas before we draw a frame. We're using the same rotations about the x and y axes as before but this time the angle is inside the variable counter, which we set to zero at the start of the code. We draw the box, and finally we increase counter by a small amount. That means the next frame will have the box drawn at a slightly different angle.

Let's see if this works:

That's looking good.

With very simple code we've rendered an animated 3-d object using p5js, and even taken advantage of the special hardware inside our computers to help accelerate the rendering.

The code for this cube is online at:

Array To Image To Texture

We want to apply our Game of Life patterns onto the surface of our 3-d object. To do that we need to work out how to turn an array of numbers into a texture. There isn't a direct way to do that - that I know of - we need to first turn the numbers into an "image" before it can be applied as a texture.Let's do this with a simpler set of numbers first. Look at the following code, most of which should be familiar.

var img;

var counter = 0;

function setup() {

// create a WEBGL canvas

createCanvas(800, 600, WEBGL);

// create empty image and fill pixel by pixel

img = createImage(400, 100);

img.loadPixels();

for(var x = 0; x < img.width; x++) {

for(var y = 0; y < img.height; y++) {

// use noise() values 0-255 to create greyscale pixels

img.set(x, y, noise(x/40,y/10)*255);

}

}

img.updatePixels();

}

function draw() {

background('white');

// animate a 3-d box

push();

rotateX(counter);

rotateY(counter);

texture(img);

box(200, 200, 200);

pop();

// increase counter which determines rotation of box

counter += 0.01;

}

The new code near the top creates that image img. Let's look at it more closely. We first create an empty image of size 400 by 100 using createImage(400, 100). To access the actual pixels, we need to use loadPixels() to expose them. We then use a loop with in a loop to visit every pixel and set it's value, which we do using the noise() function, which creates a less random randomness. Finally we need to use updatePixels() to apply those changes and commit them to the image.

That looks very hopeful.

The code for this textured cube is at:

Artificial Life as Texture

We've done all the hard work .. all we need to do is apply these ideas to the array that holds the population of the creatures that we modelled using Conway's Game of Life rules.While we're at it, let's change the shape from a box() to a torus().

To make sure the texture changes as the Game of Life patterns change, we need to update the texture image every frame.

The code is a little longer so we'll just point to it. It doesn't do anything more than what we've already discussed.

And the results ...

Very cool!

Resources

- Getting Started with WebGL in P5js - https://github.com/processing/p5.js/wiki/Getting-started-with-WebGL-in-p5

Sunday, 20 May 2018

Artificial Life And Conway's Game of Life - Part 1/2

One of the grandest ideas for an algorithm is to simulate life - to create artificial life itself!

That would be cool - but we don't really know how life works to a very deep level. Instead, we can work with a simplified model to see where that takes us.

In 1970 a British mathematician, John Conway, came up with such a simple model of life, which is now called Conway's Game of Life.

These creatures actually live in a landscape made of little cells. The little cells in which they sit are square, and so the landscape looks like grid of squares.

These little creatures are quite social and like company, just like most living things. But the company has to be just right - too crowded and they die, too lonely and they die too.

Conway designed four simple clear rules that decide whether these creatures love or die.

That is, if a creature has 0 or 1 neighbours, it will die and not appear in the next generation of the population.

That means, if a creature has 4, 5, 6, 7 or 8 neighbours, it dies of overcrowding.

This is a different kind of rule, because it works on empty cells, not already occupied cells.

Have a look at the following landscape of creatures. There are only 2 creatures sat next to each other.

What does the next generation of this population look like? We must apply the rules to find out:

So the next generation is empty - there are no surviving creatures.

Let's see how those rules apply to these creatures:

This means that the next generation will be exactly like this generation - there will be no change!

What happens to these creatures? Lets apply each of the rules:

So the population will have grown by one creature for the next generation.

In these examples, we've seen creatures die, creatures live and creatures be born.

Here are the main design decisions:

This works well. Here's an example of a 50x50 grid:

Cool!

We can see that at the initial random population starts to settle down into shapes that are static, and there are some oscillator in there too. Watching closely I can even see some nice cross patterns emerging before being obliterated by neighbouring creatures!

Before we move on, did you notice how there isn't much going on at the edges of that grid? The cells all around the edge become vacant very quickly. The reason is that we cheated a little by not considering the creatures in those edge cells to make the logic for counting neighbours easier. How do we count the neighbours for a cell on the edge of the grid? We could just count the ones that are on the grid - which makes perfect sense - but the code to make this a special case is a bit messy. So we've kept the code simple and just ignored the edge cells. For me at least, the resulting image is rather pleasing as the empty edges create a kind of frame for the patterns.

Another approach is to "wrap the grid around the edges". That is, if we walk off the edge of the grid, instead of falling off, we just re-appear on the other side. This means that when we count the neighbours for a cell on the edge of the grid, we include the cells that are on the other side of the grid. The following picture makes this clear.

We can keep the code simple, avoiding special cases for the edges, by using the javascript remainder function. The following code illustrates how coordinates (i,j) are modified to wrap around the grid:

var wrapped_i = (i + x + number_of_rows) % number_of_rows;

var wrapped_j = (j + y + number_of_columns) % number_of_columns;

The fancy term for this wrapping around is making the grid periodic. Here's the result:

If we watch carefully we can see populations on the edge move onto the opposite edge, and we also don't see the empty edges that we had before.

The code and sketch is online at:

In Part 2 we'll look at extending this basic idea of simple artificial life.

That would be cool - but we don't really know how life works to a very deep level. Instead, we can work with a simplified model to see where that takes us.

In 1970 a British mathematician, John Conway, came up with such a simple model of life, which is now called Conway's Game of Life.

Conway's Artificial Life

The living beings in Conway's game are very simple creatures that live according to really simple rules. Let's imagine them some character:These creatures actually live in a landscape made of little cells. The little cells in which they sit are square, and so the landscape looks like grid of squares.

These little creatures are quite social and like company, just like most living things. But the company has to be just right - too crowded and they die, too lonely and they die too.

Conway designed four simple clear rules that decide whether these creatures love or die.

Rule 1 - Loneliness

If a creature has less than 2 neighbours, it dies of loneliness.That is, if a creature has 0 or 1 neighbours, it will die and not appear in the next generation of the population.

Rule 2 - Just Right

If a creature has 2 or 3 neighbours, it is happy and continues to live into the next generation.Rule 3 - Overcrowding

If a creature has more than 3 neighbours, then it is overcrowded and sadly it dies.That means, if a creature has 4, 5, 6, 7 or 8 neighbours, it dies of overcrowding.

Rule 4 - Birth

If there is an empty cell surrounded by exactly 3 neighbours, then a new creature is born into this empty cell.This is a different kind of rule, because it works on empty cells, not already occupied cells.

Simple Worked Example 1

Let's look at some examples to make sure we understand how populations of these creatures evolve.Have a look at the following landscape of creatures. There are only 2 creatures sat next to each other.

What does the next generation of this population look like? We must apply the rules to find out:

- Looking at each of the creatures, we can see they are lonely, Each one has only 1 neighbour. That means they'll die of loneliness, and won't appear in the next generation.

- The other rules don't apply, there is no overcrowding, and there aren't enough neighbours to be "just right".

- No creatures are born in an empty cell, because no empty cell has exactly 3 neighbours.

So the next generation is empty - there are no surviving creatures.

Simple Worked Example 2

Let's look at a different, but still simple, example. There are now 4 creatures arranged in a square. How does this population evolve?Let's see how those rules apply to these creatures:

- Each one of the creatures has 3 neighbours. That's the "just right" amount, so the creatures will carry on living into the next generation. No creatures will die.

- There is no overcrowding, and there is no loneliness.

- No new creatures will be born as there are no empty cells with exactly 3 filled neighbours.

This means that the next generation will be exactly like this generation - there will be no change!

Simple Worked Example 3

Let's look at one more example. This landscape is similar to the previous one, but has one creature missing.What happens to these creatures? Lets apply each of the rules:

- Each of the creatures has 3 neighbours. That means it is happy and will live into the next generation.

- There are no lonely or overcrowded creatures, so no creatures will die.

- This time, a new creature will be born in that cell that had a creature in the previous example but doesn't here. This is because the empty cell has exactly 3 neighbours.

So the population will have grown by one creature for the next generation.

In these examples, we've seen creatures die, creatures live and creatures be born.

Let's Code It

Now that we've understood the rules let's write some code to actually simulate a population as it evolves. For now we'll keep the code simple so that we can stay focussed on the key ideas.Here are the main design decisions:

- The grid of cells is rectangular. That suggests a 2-dimensional array for storing the creatures. In many programming languages - eg Python - that's very easy to do. However, with Javascript, which underpins p5js, the language itself doesn't provide 2-dimensional arrays, only 1-dimensional lists. This means we just need to "unwrap" our 2-d array to a 1-d list, with each row following the next. The calculation for translating a position (i,j) in the 2d array is i + (rowsize * j) in the list. The following picture makes this clear.

- We create array (albeit unwrapped as a 1-d list) with 50 rows and 50 columns, and give it an initial random population. That means we visit each cell and use randomness to either put a creature in it, or leave it empty. We use a value of 1 to mean that there is a creature, and 0 for an empty cell. Actually, we'll be a bit biased towards emptiness, so we'll only fill a cell with a probability of 30%. In the code, we can do this using if (random() < 0.3) which is only true 30% of the time.

- We use the fact that the draw() function is called repeatedly to draw the grid and, then work out the next generation. This should give us an animation if the contents of the grid change. We saw how a repeatedly called draw() can be used to create animation in a previous post. We actually slow down the rate at which draw() is called to 5 frames per second using frameRate(5). The default frame rate is much higher, with is great for smooth animation, but not for watching how our creature populations evolve.

- We use the familiar loop within a loop to count rows j and columns i, as we visit each cell at (i,j) of the grid and consider how the rules apply. To work out the number of neighbours, we need to also visit the neighbours of this cell at (i,j). We use yet another loop within a loop to count the rows and columns immediately around this cell.

- We apply the rules and decide whether the creature lives on into the next generation. If it does, we don't write it into the current array grid_array, because that would make the next neighbour counts wrong. We need to put it into a temporary new array grid_array_2 Once all the cells have been visited, and a new temporary array completed, we point the name name grid_array at the new data, and leave javascript to free up the memory used for the now out of date data. If you're interested, this discussion on stackexchange confirms how/why this works.

This works well. Here's an example of a 50x50 grid:

Cool!

We can see that at the initial random population starts to settle down into shapes that are static, and there are some oscillator in there too. Watching closely I can even see some nice cross patterns emerging before being obliterated by neighbouring creatures!

Before we move on, did you notice how there isn't much going on at the edges of that grid? The cells all around the edge become vacant very quickly. The reason is that we cheated a little by not considering the creatures in those edge cells to make the logic for counting neighbours easier. How do we count the neighbours for a cell on the edge of the grid? We could just count the ones that are on the grid - which makes perfect sense - but the code to make this a special case is a bit messy. So we've kept the code simple and just ignored the edge cells. For me at least, the resulting image is rather pleasing as the empty edges create a kind of frame for the patterns.

Another approach is to "wrap the grid around the edges". That is, if we walk off the edge of the grid, instead of falling off, we just re-appear on the other side. This means that when we count the neighbours for a cell on the edge of the grid, we include the cells that are on the other side of the grid. The following picture makes this clear.

We can keep the code simple, avoiding special cases for the edges, by using the javascript remainder function. The following code illustrates how coordinates (i,j) are modified to wrap around the grid:

var wrapped_i = (i + x + number_of_rows) % number_of_rows;

var wrapped_j = (j + y + number_of_columns) % number_of_columns;

The fancy term for this wrapping around is making the grid periodic. Here's the result:

If we watch carefully we can see populations on the edge move onto the opposite edge, and we also don't see the empty edges that we had before.

The code and sketch is online at:

Simple Rules, Complex Behaviour

We've explored the very simple rules that Conway designed to model artificial life. It is striking that the rules are so simple - and yet the resulting patterns and their behaviours are quite interesting.In Part 2 we'll look at extending this basic idea of simple artificial life.

Resources

- Really fun online Conway's Game Of Life - https://bitstorm.org/gameoflife/

- The wikipedia article on Conway's Game of Life covers the various behaviours in an easy to read form - https://en.wikipedia.org/wiki/Conway%27s_Game_of_Life

Subscribe to:

Comments (Atom)